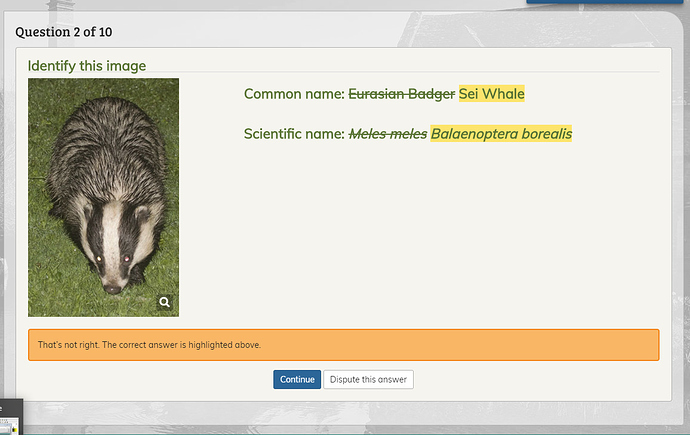

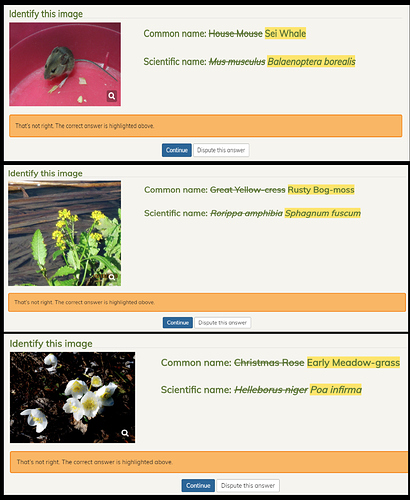

If you look at the Quiz tab at Your iSpot, it has the correct scores, but they’re rounded down on the quiz finish page. (And I suspect that the histogram is based on fake data, rather than real data.)

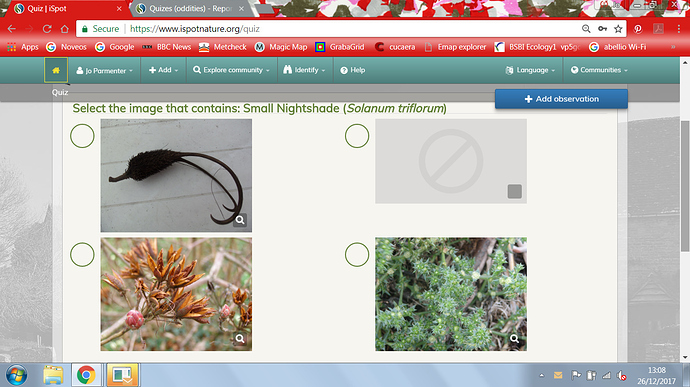

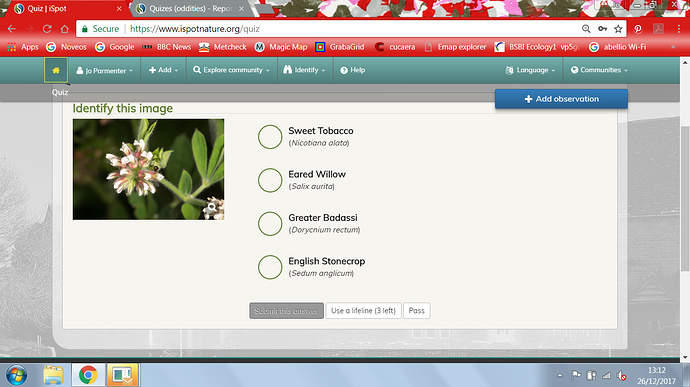

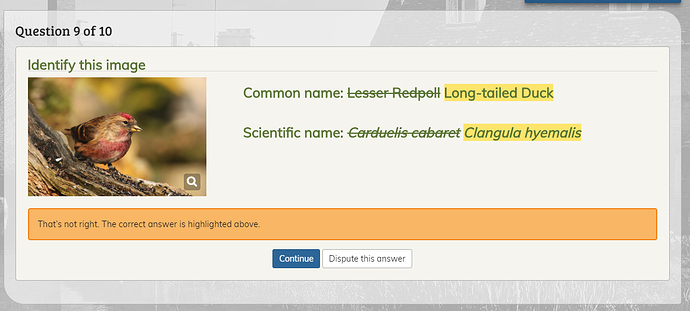

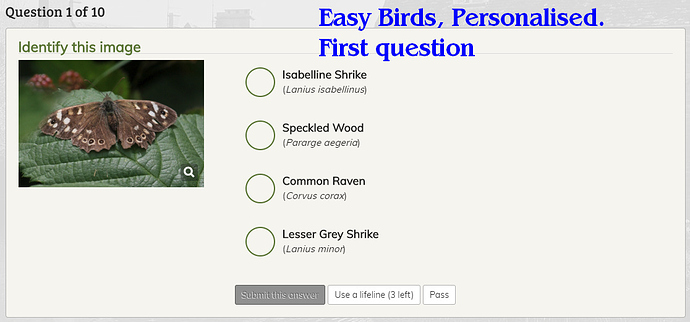

The multiple choice questions are generally easy for experts and those approaching expert level (they’re effectively identify-the-family questions). For your first screen grab I assume that the blank image was the correct answer, as none of the others is, as far as I can tell from the thumbnails, a Solanum (though I can’t identify any of them from the thumbnails; is one Rhododendron luteum?). For your second screen grab, I have the advantage of knowing that Dorycnium is a legume genus.

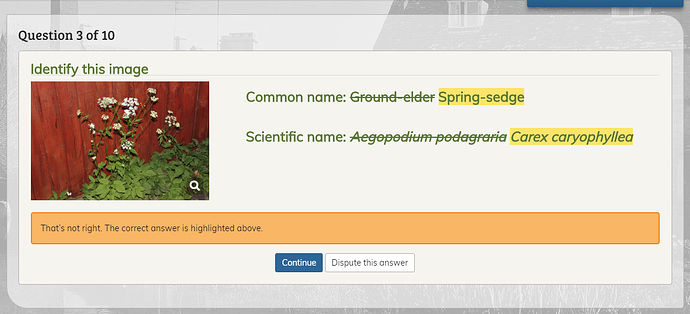

A problem is that the iSpot plants category covers 3 different field naturalist communities (vascular plants, mosses and algae), and cross-community questions are not easy, except that most people have some knowledge of the flowering plant subset of vascular plants.

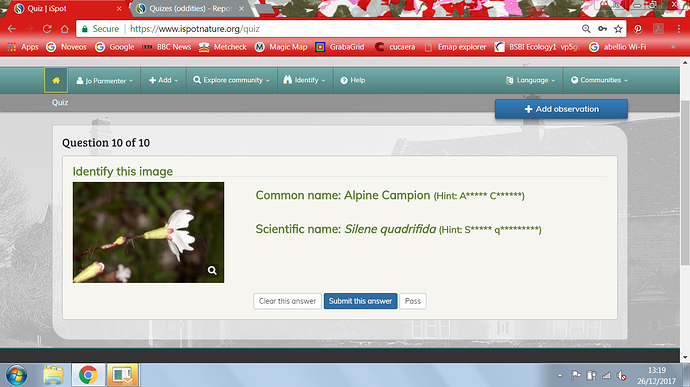

With your last question the plant can be identified as a silenoid, but I’m not sure I could distinguish it as Silene rather than Dianthus from that photograph. How do you exploit the hint? After some thought I came up with hitting the Euro+Med database. (Alternatively, Wikipedia sometimes gives lists of species for genera.)

I got a Solidago gigantea to identify. It was on the edge of identifiability from the photograph. (In the field I use touch - leaf hairiness - and optical aid - upper stem indumentum - to distinguish the two species.) I agree that it’s too much to ask a beginner to do.

I strongly suspect that quizzes aren’t geographically restricted. Some overseas images could sneak in because observations have historically ended up in the wrong community, and others have badly misplaced locations, but there are too many non-British plants turning up for that to be the whole explanation.

Setting up a quiz without domain knowledge (e.g. which groups are critical and which groups are easy) is difficult, but the old iSpot made a decent stab at it. The new one looks as if it’s trying to produce the questions using simple mechanical rules, such as pick 4 taxa from the group. (Pick 4 taxa from one order on the intermediate quizzes.)
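To make that guess concrete, here is a minimal sketch of what a purely mechanical rule like that might look like. Everything in it is hypothetical: the tiny taxon table, the function names and the choice of genera as the unit are my own illustration, not anything taken from iSpot.

```python
import random

# Hypothetical mini-taxonomy (order -> genera), for illustration only.
TAXA_BY_ORDER = {
    "Caryophyllales": ["Silene", "Dianthus", "Stellaria", "Cerastium", "Rumex"],
    "Asterales": ["Solidago", "Bellis", "Senecio", "Campanula"],
    "Fabales": ["Dorycnium", "Lotus", "Trifolium", "Vicia", "Polygala"],
}


def mechanical_question(correct, pool, rng, n_options=4):
    """Return n_options shuffled answers: the correct taxon plus random others.

    No domain knowledge is used, so critical look-alikes are as likely to be
    picked as easy contrasts.
    """
    distractors = rng.sample([t for t in pool if t != correct], n_options - 1)
    options = distractors + [correct]
    rng.shuffle(options)
    return options


def beginner_question(correct, rng):
    """Assumed beginner rule: pick 4 taxa from anywhere in the group."""
    pool = [t for genera in TAXA_BY_ORDER.values() for t in genera]
    return mechanical_question(correct, pool, rng)


def intermediate_question(correct, rng):
    """Assumed intermediate rule: pick 4 taxa from the same order."""
    order = next(o for o, genera in TAXA_BY_ORDER.items() if correct in genera)
    return mechanical_question(correct, TAXA_BY_ORDER[order], rng)


if __name__ == "__main__":
    rng = random.Random()
    print(beginner_question("Silene", rng))
    print(intermediate_question("Silene", rng))
```

With a rule like this, nothing stops the intermediate version from offering Silene and Dianthus together for a photograph that can’t separate them, which is the sort of thing a hand-built quiz would avoid.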